In a previous post I described how I generate consistent clips of my characters from reference images. But how do I edit those clips together into coherent scenes that tell a story?

I'm not an experienced video editor by any means, but I'll share what's worked for me so far!

DaVinci Resolve

I use DaVinci Resolve to edit my videos. The most popular professional video editors are Adobe Premiere, Apple's Final Cut Pro, and Blackmagic's DaVinci Resolve. Of the three, only DaVinci Resolve is free. Blackmagic also sells a Studio version, which costs $299 and includes some extra features, but I have yet to run into anything I needed beyond the free version.

Blackmagic makes some of the most popular video cameras for mere mortals, and my friends who edit video professionally praise DaVinci Resolve. In addition to its timeline for editing clips together, it includes pages for advanced visual effects, color correction, and sound design.

The tradeoff for having so much functionality is complexity—I've barely scratched the surface of everything DaVinci Resolve is capable of. But the interface is reasonably easy to use. I've been able to figure out how to do everything I need as I go with the help of Google and ChatGPT.

From Script to Screen

In the script excerpt I shared recently, I have the following block of scene description:

Macro shot of grass and wildflowers in a meadow, frozen in place as the camera moves. Dew glistens, frozen on motionless petals. Gradually, the grasses start swaying in a new breeze as sound and music (strings, wistful) fades in. A young woman's bare feet dance past. After she passes, the audio fades and the grasses freeze again, resetting to their original positions.

As I generated and edited the scene, I made a few adjustments for better flow. I decided to make this shot at waist-level, because I show feet in the first shot. Instead of fading the music in and out, I synchronized the music with the visuals.

Here is the final shot:

This shot is composed of several clips stitched together. Let's break it down.

Generating the Action Shot

First, I headed to Flow to use Veo 3 to generate a simple shot of Maya walking past the grass.

For my first attempt, I uploaded an image of Maya running through the meadow that I'd generated with Midjourney, and used this prompt:

Instantly jump cut on frame one to a macro shot of tall grass swaying in the breeze. At first, only the swaying grass is visible. Then the young woman dances into the frame from the left. She brushes her hand over the blades as she dances past, so close to the camera you can't see much more than her hand and waist. She exits the frame to the right.

I didn't get anything I could work with. My results often didn't have her entering and exiting the frame properly, or had her dressed very differently, or with two braids instead of one.

Finally, I turned to ChatGPT for help. I provided Maya's character sheet and asked:

Help me create a prompt for Veo 3 to generate a video. I want a macro shot of tall grasses in a wildflower-covered meadow. The grasses sway in a light breeze. Then the young woman in this image runs/dances through the frame, running her hand through the grass. She is close to the camera, so only her hand and waist are visible. Describe her in enough detail that the video generator will be able to represent her accurately.

I followed up, asking it to use the "jump cut on frame one" trick, and ChatGPT gave me the prompt I used. It took a few tries, but I was pretty happy with this result:

Instantly cut from the reference still to a new shot. A macro cinematic close-up of tall grasses in a wildflower meadow, swaying gently in a light breeze. The camera is very close to the grasses, shallow depth of field, golden natural sunlight. Into the frame, the same young woman from the first frame runs and dances past. Only her waist, skirt, and hand are visible as she brushes her fingers through the grasses. The fabric of her flowing beige dress and puffed patterned sleeves catches the air as she moves. Her hand is graceful, adorned with simple jewelry. The motion feels tactile, immersive, and naturalistic, with the grasses bending softly to her touch. Cinematic, macro lens, shallow depth of field, photoreal, warm sunlight, poetic atmosphere.

Fixing Artifacts

If you look closely, you'll notice she only has one sleeve and it's way too long. I chalk that up to the explicit mention of "puffed patterned sleeves" in the prompt, even though I didn't need her sleeves in the shot. I tried several more times with that phrase removed, but never got anything close to this good.

I was annoyed enough by the sleeve that I decided to try Runway Aleph. It's a recent model that's made for editing video. I tried uploading the video from Veo, but it was too long. Luckily the video was in slow motion, so I could afford to speed it up. I could have used a video editor like iMovie or DaVinci Resolve for this (there doesn't appear to be a way to permanently change the speed of a video in any of the utilities that come with my Mac), but for a simple edit like this, I prefer to use a command-line tool.

Using the Terminal to Speed Up My Clip

Feel free to skip to the next section if you prefer using a visual editor to command-line tools.

I opened the Terminal app and typed:

brew install ffmpeg

The brew command is from Homebrew, a package manager for macOS that makes it easy to install various command-line tools. You can install it by pasting the line on their homepage into Terminal before running the brew install command.

ffmpeg is a powerful free tool for editing video. Once I had it installed, I used ChatGPT to come up with the command I needed:

ffmpeg -i /path/to/my/input/video.mp4 -filter:v "setpts=0.5*PTS" -an /path/to/my/output/video.mp4

This command filters the video by setting the presentation timestamp of each frame to half its value (effectively speeding it up 2x), and the -an flag removes the audio.
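If you want a different speed, the only number that changes is the setpts multiplier, which is 1 divided by the speed-up factor. Here's a minimal Python sketch that builds the same command for any factor. The function name build_speedup_args and the file names are made up for illustration; the ffmpeg flags are the ones from the command above.

```python
import subprocess


def build_speedup_args(input_path, output_path, speed):
    """Build ffmpeg arguments that play a clip `speed` times faster, without audio."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    # Each frame's presentation timestamp is multiplied by 1/speed,
    # so speed=2 gives setpts=0.5*PTS.
    pts_factor = 1 / speed
    return [
        "ffmpeg",
        "-i", input_path,
        "-filter:v", f"setpts={pts_factor:g}*PTS",
        "-an",  # drop the audio track
        output_path,
    ]


# Hypothetical file names, for illustration only.
args = build_speedup_args("input.mp4", "output.mp4", speed=2)
print(" ".join(args))

# To actually run it (requires ffmpeg to be installed):
# subprocess.run(args, check=True)
```

Passing the arguments as a list means the filter expression doesn't need the shell quoting that the typed command does.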

Runway Aleph Fail

My new video file was half as long, and Runway accepted it. I tried to prompt it to "remove the sleeve", but no matter how I worded it, it didn't work. Most of the time it didn't change the video at all. A couple of times I got very weird results.

Eventually I gave up on fixing the sleeve. While it's the kind of thing that might give a Hollywood script supervisor nightmares, one of the tradeoffs of using AI to tell stories at this stage of the technology is you have to embrace its imperfections. It doesn't hurt the story and most people may not even notice. I decided to move on.

Bullet Time

I dropped the sped-up clip into my timeline on the Edit page in DaVinci Resolve. Next, I needed the shot of the grass frozen in time. This kind of effect, where the camera is moving but the scene is frozen, is often called a "bullet time" effect, after its revolutionary use in The Matrix:

I've had relatively good luck with Runway for these kinds of shots—Midjourney and Veo seem to have a hard time diverging from realistic movement.

I wanted to use the first frame of my Veo grass clip to generate the bullet-time clip, but a bit of Maya's arm is visible. In DaVinci Resolve, I adjusted the zoom property of the video transform (from the Inspector panel) until it was no longer visible (this also cropped out the Veo watermark). I took note of the zoom factor (1.130).

Next I opened the video in QuickTime Player (typically the default app if you open a video file on a Mac) and scrubbed to the first frame. I pressed ⌘+C to copy the frame. Then I opened Pixelmator Pro (my favorite image and graphics editor) and clicked File → New from Clipboard. I used the Crop tool in the bottom-right of the sidebar to crop the image from 1280x720 to 1132x637 (calculated by dividing each dimension by the 1.130 zoom factor I noted in the previous step and dropping the fraction).
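The crop arithmetic is easy to double-check. This is a small sketch, with a function name (cropped_size) invented for illustration, that divides each dimension by the zoom factor. It truncates instead of rounding, which is an assumption chosen because it reproduces the 1132x637 figure.

```python
def cropped_size(width, height, zoom):
    """Size of the region still visible after zooming in by `zoom`."""
    # int() drops the fraction, which matches the 1132x637 figure in the post;
    # rounding to the nearest pixel would give 1133 for the width instead.
    return int(width / zoom), int(height / zoom)


print(cropped_size(1280, 720, 1.130))  # (1132, 637)
```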

Once I had my cropped image, I uploaded it to Runway and got a result I was happy with right away:

The grass is frozen in place as the camera orbits around it. The grass is under a magic spell and does not move. The macro lens moves in an arc.

I inserted this clip into my timeline before the other one and reversed it (right-click on the clip → Change Clip Speed... → check the "Reverse Speed" checkbox). I did this so that the last frame of the bullet-time clip lined up perfectly with the first frame of the action clip. Now I had a shot where the grass was frozen, then immediately started moving as Maya appeared.

The In-Between Shot

In my mind (and script), the grass starts swaying a bit before Maya shows up. I turned to another tool to make this happen: Midjourney.

I uploaded the same first frame to Midjourney and used it as a starting frame for video generation. I checked the box to loop the video, so it automatically used the same image for the ending frame. I entered a simple prompt and it worked perfectly:

The grass sways in the breeze

I inserted this clip in between my bullet-time clip and my action clip and it bridged the gap, giving me a few seconds of moving grass before Maya enters the frame.

The Reversal

After Maya exits the shot, the grass is supposed to "snap back" to its original frozen state. Great! I can just reverse the action clip and speed it up! Just one problem: Maya is in it. I wanted to rewind the grass without rewinding Maya.

I decided to give Runway Aleph a shot at this. Using the same sped-up Veo clip, I asked Runway to "Remove the person". It worked perfectly, giving me a clip of the waving grass as the camera tracks Maya, but with Maya missing. I appended this clip to my timeline, reversed it, and sped it up.

Again, I wasn't happy with the rewind effect happening immediately when Maya leaves the frame, so I created another in-between clip using the last frame of the action clip and the exact process I described in the previous section.

Finally, I appended the bullet-time clip again, playing forward this time (so its first frame would line up with the last frame of the reversed, Maya-less, action clip).
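To keep the order straight, it can help to write the timeline down as data and check that every clip ends on the frame the next one starts on. The sketch below is only a model of the sequence described so far: the clip names and frame labels are illustrative labels, not real file names, and the check compares labels, not pixels.

```python
from typing import NamedTuple


class Clip(NamedTuple):
    name: str
    first_frame: str
    last_frame: str


def reverse(clip, name):
    """Reversing a clip swaps its first and last frames."""
    return Clip(name, clip.last_frame, clip.first_frame)


# Frame labels are illustrative placeholders for "what the frame looks like".
bullet_time = Clip("Bullet time", "frozen start", "orbit end")
action = Clip("Action (Maya passes)", "frozen start", "after Maya")
action_no_maya = Clip("Action without Maya", "frozen start", "after Maya")

timeline = [
    reverse(bullet_time, "Bullet time (reversed)"),
    Clip("Grass loop", "frozen start", "frozen start"),
    action,
    Clip("Ending grass loop", "after Maya", "after Maya"),
    reverse(action_no_maya, "Action without Maya (reversed, sped up)"),
    bullet_time,
]


def find_mismatches(clips):
    """Return name pairs of adjacent clips whose boundary frames differ."""
    return [
        (a.name, b.name)
        for a, b in zip(clips, clips[1:])
        if a.last_frame != b.first_frame
    ]


print(find_mismatches(timeline))  # []
```

An empty list means each cut lands on matching frames, which is why the first bullet-time clip has to be reversed and the last one plays forward.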

The Glitch Effect

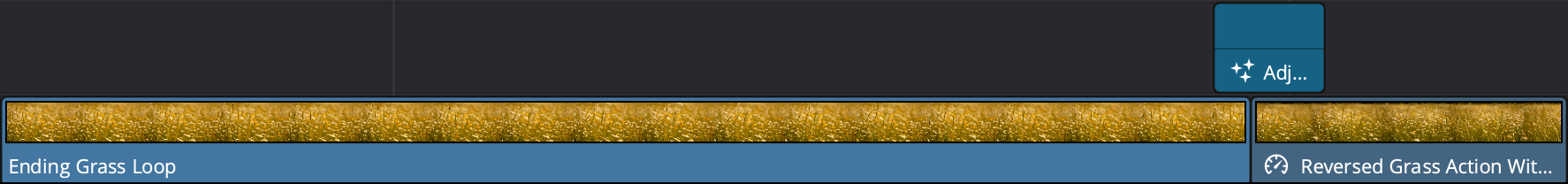

Once I had all my clips arranged on the timeline, I wanted to add a glitch effect when the "rewind" starts. I added an Adjustment Clip from the Effects section of the Effects panel to a new video track positioned above my clips on the timeline. I positioned it for just a few frames at the end of "Ending Grass Loop" and the beginning of "Reversed Grass Action Without Maya". Then I dragged a Digital Glitch effect from the Fusion Effects subsection onto the adjustment clip.

The adjustment clip makes it so you can apply an overlay effect across multiple clips—if I had just dropped the glitch effect onto my timeline directly, it would have to apply to a single clip, not portions of two adjacent clips.

Color Matching

As I scrubbed back and forth in my timeline, I noticed that the transition between adjacent clips could be jarring. Even where I had lined up the frames perfectly, the colors used by Veo often differed from the output of the other models.

Notice how the clip on the left is slightly redder and brighter than the clip on the right

To fix this, I had to break open a new page in DaVinci Resolve that I hadn't braved before: the Color page.

I opened the Color page using the tab at the bottom and pressed the button at the top right to show the timeline. This let me scrub easily between clips so I could see the effect my changes were having.

I selected the clip on the left, then right-clicked on the clip on the right and chose Shot Match to this Clip. That helped some, but the left clip still looked too red. So I opened the Color Wheels panel and slightly reduced the red component of the Gamma setting (-0.02). Changing gamma adjusts the mid-tones of the image, as opposed to lift (the shadows) or gain (the highlights). Since I wanted to adjust the whole image to look less red, I tried gamma first, and it seemed to work.

Now the clips are better matched

I similarly matched up the colors between the other adjacent clips until it looked seamless to my eye.

Wrapping Up

After putting it all together, I was able to export this final shot (included again here for reference):

This has gotten pretty long already, so I'll save discussion of sequencing multiple shots and integrating music for another post. Editing my scene together has highlighted again how using AI for filmmaking is so much more than just prompting. Screenwriting and editing are critical skills for visual storytellers.